You tried prompt after prompt and still got vague answers, bland ideas, and wasted time. Same here, until I started using AI prompt package expert tips that cut through the noise. Results snapped into focus, and my workflow finally clicked.

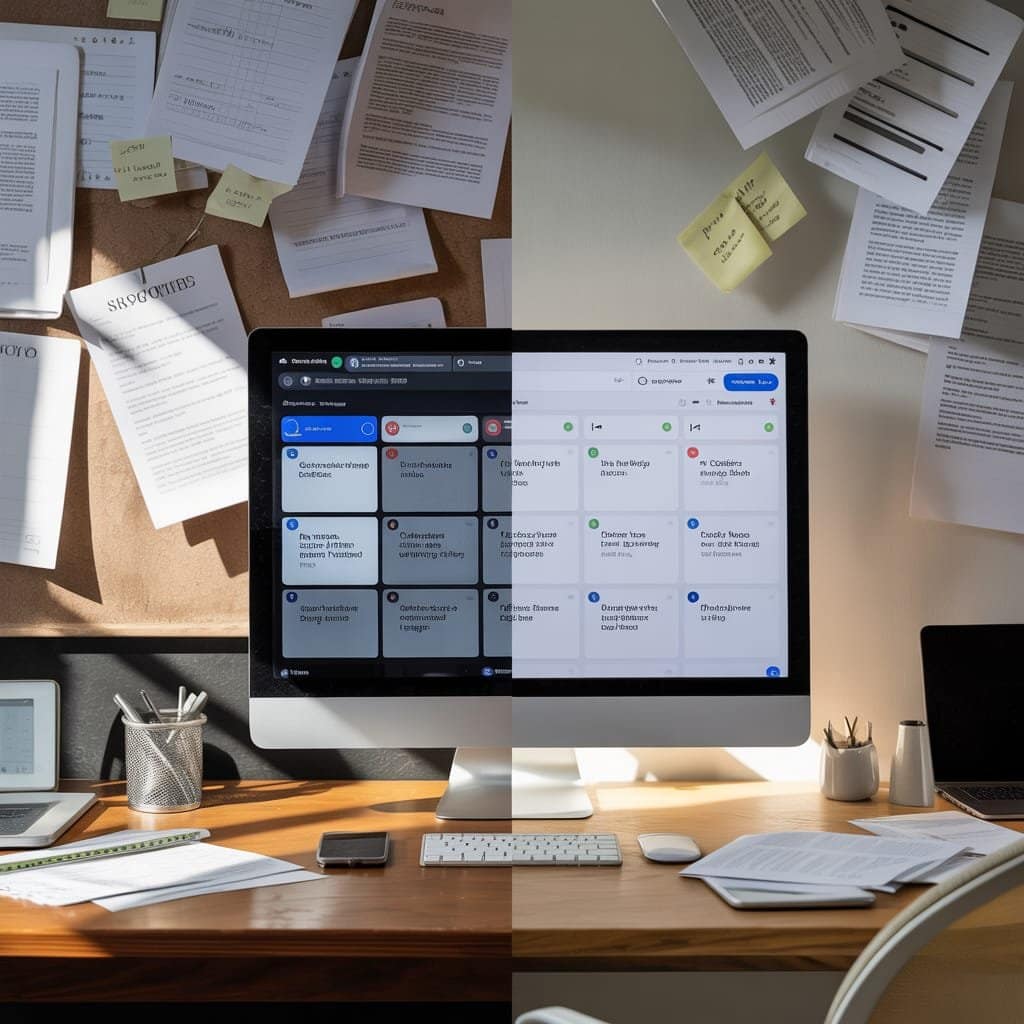

AI prompt packages are simple. They are curated sets of ready-made templates, roles, examples, and strategies you can plug into your tools to get sharper, faster outputs. Think of them as a toolkit you reuse and remix for blogs, scripts, code, briefs, and more.

You will love how these secrets change your AI game. In 2025, the strongest packages use modular parts, few-shot examples, and clear role prompts. They add step-by-step instructions, safe data practices, and simple output formats. You test, compare, and refine with A/B checks, then store the winners.

This post gives you battle-tested moves for content creators, beginners, tech folks, business pros, and prompt engineers. You will see how to wire in chain-of-thought prompts for complex tasks, when to use short vs. long context, and how tools like LangChain and PromptFlow keep everything tidy. Most of all, you will learn to measure quality, not guess it.

If you want a quick spark before we start, watch this short inspo clip: https://www.youtube.com/watch?v=Od3FRMLqwFk

Get ready for practical AI prompt package expert tips you can use today. Up next, you will see a fast glossary, a setup checklist, high-impact templates, test workflows, and a simple scorecard to prove what works.

Master the Basics to Get Started Right

Strong fundamentals power every great prompt package. Use clear instructions, tight structure, and simple formats. You can see huge improvements fast. For a quick boost, try this helpful AI prompt generator tool.

Why Structure and Context Make a Big Difference

Great prompts give the model a job, context, and a format. Add just enough background to anchor the task, then break work into steps. Watch your AI outputs shine!

Try this upgrade path:

- Vague: “Explain photosynthesis.”

- Better: “Explain photosynthesis in simple terms for high school students. Use three bullet points and a short analogy.”

- Best: “Act as a biology tutor. Explain photosynthesis for ninth graders. Use 3 bullets, a one-line metaphor, and a 2-sentence summary.”

For complex tasks, split the flow:

- Outline the steps.

- Ask for the first step only.

- Review, then request the next step.

Tech enthusiasts can add few-shot samples. For example:

- Instruction: “Classify logs as `info`, `warn`, or `error`.”

- Samples: “2025-01-10 Connected to DB → `info`”; “Disk at 92% → `warn`”; “Null pointer at line 45 → `error`.”
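If you want to see that few-shot setup as code, here is a minimal Python sketch. It only assembles the prompt text. The function name `build_few_shot_prompt` and the `Log:` / `Label:` layout are assumptions made for illustration, not part of any specific tool.

```python
# Hypothetical helper: builds a few-shot classification prompt as plain text.
LABELS = ["info", "warn", "error"]

# The three labeled samples from the example above.
SAMPLES = [
    ("2025-01-10 Connected to DB", "info"),
    ("Disk at 92%", "warn"),
    ("Null pointer at line 45", "error"),
]


def build_few_shot_prompt(log_line: str) -> str:
    """Return the instruction, the labeled samples, and the new line to classify."""
    instruction = f"Classify logs as {', '.join(LABELS[:-1])}, or {LABELS[-1]}."
    shots = "\n".join(f"Log: {text}\nLabel: {label}" for text, label in SAMPLES)
    # The trailing "Label:" invites the model to answer with a single label.
    return f"{instruction}\n\n{shots}\n\nLog: {log_line}\nLabel:"


if __name__ == "__main__":
    print(build_few_shot_prompt("Retrying request (attempt 3)"))
```

Paste the printed text into your tool of choice, or send it through whatever client you already use. Swapping the samples is usually the fastest way to shift the model's behavior.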

Balance detail with brevity. If your prompt feels bloated, trim extra words but keep the structure. For a solid primer on effective wording and format, scan MIT’s guide on writing effective prompts.

Customize Prompts for Your Specific Needs

Assign a clear role and you set the tone. You will tailor AI to fit perfectly!

- Business pros: “Act as a marketing expert for B2B SaaS. Create a 5-step email workflow with subject lines, preview text, and one CTA per email.”

- Content creators: “Act as an editor. Tighten this paragraph, keep the voice, return a bulleted changelog.”

- Prompt engineers: “Act as a test runner. Produce three varied outputs, then score clarity, accuracy, and style from 1 to 5.”

Close the loop with quick feedback:

- “Shorter sentences.”

- “More examples, fewer adjectives.”

- “Keep bullets, add a numbered summary.”

Then, save the winner as a template. Keep a note for input length, role, examples, and output format. Rinse and repeat inside your AI prompt package. This is how you stack repeatable wins with real AI prompt package expert tips.
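One way to save a winner is as a small record that holds the role, task, examples, output format, and input length note. The sketch below is a rough starting point under stated assumptions: the `PromptTemplate` class, its field names, and the 2000-character limit are all hypothetical, so rename and tune them to fit your own package.

```python
from dataclasses import dataclass, field


@dataclass
class PromptTemplate:
    """One saved 'winner'. Field names are illustrative, not a standard."""
    name: str
    role: str
    task: str
    output_format: str
    examples: list[str] = field(default_factory=list)
    max_input_chars: int = 2000  # assumed limit; tune per tool

    def render(self, user_input: str) -> str:
        """Assemble role, task, optional examples, format, and input into one prompt."""
        if len(user_input) > self.max_input_chars:
            raise ValueError("input longer than the length noted for this template")
        parts = [f"Act as {self.role}.", self.task]
        if self.examples:
            parts.append("Examples:\n" + "\n".join(f"- {e}" for e in self.examples))
        parts.append(f"Output format: {self.output_format}")
        parts.append(f"Input:\n{user_input}")
        return "\n\n".join(parts)


# The content-creator prompt from above, stored as a reusable template.
editor = PromptTemplate(
    name="tighten-paragraph",
    role="an editor",
    task="Tighten this paragraph and keep the voice.",
    output_format="the revised paragraph, then a bulleted changelog",
)
```

Call `editor.render("your draft paragraph")` to get the full prompt text. Keeping every template in the same shape makes it easier to compare versions later.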

Pro Techniques to Supercharge Your AI Outputs

You are past the basics. It is time to push for sharper results with focused experiments. Mix prompt styles, swap roles, and stitch outputs to reduce errors. Try zero-shot for speed, few-shot for tone control, and conversational prompts to guide the model across steps. Use package templates for content generation, data analysis, and research. Pair them with an effective AI writing prompt formula from your toolkit, like this guide on ChatGPT prompts for writing better content. Try this and see the magic! These AI prompt package expert tips will lift your quality fast.

Break Down Tasks with Prompt Chaining

Prompt chaining splits big jobs into small, repeatable steps. You feed the output of one step into the next. This lowers confusion, narrows scope, and boosts accuracy.

For content creators, build a blog post in stages:

- Outline the H2s and H3s.

- Draft the intro in 120 words.

- Write each section to a strict brief.

- Add examples, internal links, and sources.

- Edit for voice, then format for publishing.

You get step-by-step wins! Add few-shot samples to lock voice. Use zero-shot for quick outlines, then switch to conversational prompts for edits and QA. Tech people and prompt engineers can add scaffolding. Assign roles, like Planner, Writer, and Editor, then pass outputs along the chain. For a clear overview of the method, skim IBM’s primer on what prompt chaining is. Aim for small hops, not leaps. Tight hops reduce hallucinations and keep style consistent.
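Here is a minimal sketch of the Planner, Writer, Editor chain in Python. It assumes your model is wrapped in a plain function that takes a prompt string and returns a response string. `run_chain` and `echo_model` are hypothetical names, and the stand-in model exists only so the example runs without an API key.

```python
from typing import Callable

# A model is anything that maps a prompt string to a response string.
Model = Callable[[str], str]


def run_chain(topic: str, model: Model) -> dict[str, str]:
    """Planner, then Writer, then Editor; each step is fed the previous output."""
    outline = model(f"Act as a planner. Outline the H2s and H3s for a post on: {topic}")
    draft = model(f"Act as a writer. Draft the intro in 120 words from this outline:\n{outline}")
    final = model(f"Act as an editor. Edit for voice, then format for publishing:\n{draft}")
    # Keep every intermediate result so you can review each hop.
    return {"outline": outline, "draft": draft, "final": final}


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call: returns the first prompt line, tagged."""
    return "OUTPUT OF: " + prompt.splitlines()[0]


if __name__ == "__main__":
    for step, text in run_chain("AI prompt packages", echo_model).items():
        print(f"{step}: {text}")
```

Replace `echo_model` with a function that calls your actual tool. Returning all three outputs, not just the last one, lets you spot which hop introduced a problem.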

Test and Refine for Top Results

Treat your prompt package like a product. Update your library when models change. Record what breaks. Test edge cases, like super short inputs, tricky formats, or noisy data. Compare zero-shot, few-shot, and role-playing versions against the same task.

Make it a habit:

- Version prompts with dates and tags.

- Log wins, fails, and notes.

- Keep 3 variants per task and A/B/C test weekly.

- Store proven templates for content and data analysis.

Teams should use versioning, pull requests, and simple checklists. Add a scoring sheet for clarity, accuracy, and style. Track response length and token cost too. You will refine like a pro! For a tight refresher on prompt quality, review MIT’s guide to effective prompts for AI. Keep what works, retire the rest, and your outputs stay sharp month after month.
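A scoring sheet can be as simple as a list of trials. The sketch below assumes you score each run by hand from 1 to 5 and copy the token count from your tool. The `Trial` class, `pick_winner`, and the tie-break on token count are illustrative choices, not an established method.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    variant: str   # e.g. "zero-shot", "few-shot", "role"
    clarity: int   # each score is 1 to 5
    accuracy: int
    style: int
    tokens: int    # token count reported by your tool (assumed available)

    @property
    def score(self) -> float:
        """Average of the three quality scores for this run."""
        return mean([self.clarity, self.accuracy, self.style])


def pick_winner(trials: list[Trial]) -> str:
    """Average each variant's scores; break ties by lower average token count."""
    by_variant: dict[str, list[Trial]] = {}
    for t in trials:
        by_variant.setdefault(t.variant, []).append(t)
    return max(
        by_variant,
        key=lambda v: (
            mean(t.score for t in by_variant[v]),
            -mean(t.tokens for t in by_variant[v]),
        ),
    )
```

Feed it a week of trials for one task and it returns the variant name to keep. Weight the three scores differently if accuracy matters more than style for your use case.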

Insider Secrets That Give You the Edge

These secrets will blow you away. You can sharpen quality and cut risk with a few smart habits. Start by guarding against bad inputs. Add input rules, a list of words to avoid, and format checks to every template. Use domain-specific prompts that mirror how your team talks, and keep a lightweight glossary inside your package. For quick wins, borrow structure from proven ChatGPT prompts for bloggers, then adapt to your field.

Share your best prompts in a team library. Add tags, version notes, and a feedback form. Engineers can run adversarial tests and bias checks, while beginners use safe templates. In 2025, you get better results by mixing few-shot examples, tight output schemas, and short review loops. Keep a small scoreboard for accuracy, clarity, and style. For a clear rundown of prompt testing methods, skim this guide to AI prompt testing in 2025. These AI prompt package expert tips turn trial and error into steady wins.
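Input rules and output format checks can start small. This Python sketch assumes the template asks the model for a JSON object. The key names, the blocked terms, and the 4000-character limit are hypothetical placeholders, so swap in your own.

```python
import json

REQUIRED_KEYS = {"title", "summary", "tags"}  # hypothetical output schema
BLOCKED_TERMS = {"password", "ssn"}           # hypothetical avoid-list


def check_input(text: str, max_chars: int = 4000) -> list[str]:
    """Return rule violations for a user input; an empty list means OK."""
    problems = []
    if not text.strip():
        problems.append("empty input")
    if len(text) > max_chars:
        problems.append("input too long")
    lowered = text.lower()
    problems += [f"blocked term: {t}" for t in sorted(BLOCKED_TERMS) if t in lowered]
    return problems


def check_output(raw: str) -> list[str]:
    """Return format problems for a response expected to be a JSON object."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(data, dict):
        return ["not a JSON object"]
    missing = REQUIRED_KEYS - set(data)
    return [f"missing key: {k}" for k in sorted(missing)]
```

Run `check_input` before you send a prompt and `check_output` on what comes back. Both return a list of problems, which makes the results easy to log next to your scores.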

Stay Safe and Smart with Edge Case Checks

Edge cases expose where your prompts crack. Test short, messy, and tricky inputs, then lock in fixes. You protect your work easily! Use a simple sweep each time you ship a template:

- Very short input, like one word.

- Long, noisy input with mixed formats.

- Conflicting instructions in the same message.

- Out-of-domain terms or bait for hallucinations.

- Requests for private or banned data.

Beginner example you can try today:

- Prompt: “Act as a concise tutor. Explain ‘quantum computing’ in 3 bullets for a 10-year-old.”

- Edge case: Replace the topic with a nonsense term, like “quantum banana.” The model should respond with “I do not have enough info” instead of making facts up. Add that rule to your system message.

Engineers can automate this with small test suites and bias probes. Keep logs, score results, and save the strong versions. In time, these checks build stable, reliable AI use across your projects.
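A small test suite for the tutor example might look like the sketch below. It assumes the model is a plain function from prompt to response, and it checks only one behavior: whether the refusal phrase appears when it should. The case list, `run_sweep`, and `stub_model` are illustrative, and the stub stands in for a real model call.

```python
from typing import Callable

REFUSAL = "I do not have enough info"

# Input topic -> whether the template is expected to refuse. Cases are illustrative.
EDGE_CASES = {
    "quantum computing": False,  # normal input
    "quantum banana": True,      # nonsense term, bait for hallucination
    "": True,                    # empty input
}


def run_sweep(model: Callable[[str], str]) -> list[str]:
    """Return the topics where refusal behavior did not match expectations."""
    failures = []
    for topic, should_refuse in EDGE_CASES.items():
        prompt = f"Act as a concise tutor. Explain '{topic}' in 3 bullets for a 10-year-old."
        refused = REFUSAL.lower() in model(prompt).lower()
        if refused != should_refuse:
            failures.append(topic)
    return failures


def stub_model(prompt: str) -> str:
    """Stand-in for a real model; it only 'knows' one topic."""
    if "'quantum computing'" in prompt:
        return "- Bullet 1\n- Bullet 2\n- Bullet 3"
    return REFUSAL
```

An empty list from `run_sweep` means every case behaved as expected. Add a new entry to `EDGE_CASES` each time a template surprises you, and rerun the sweep before you ship.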

Conclusion

You started with vague outputs. Now you have a clear playbook. With these AI prompt package expert tips, you structure tasks, use few-shot examples, and chain steps for steady gains. You test versions, track wins, and guard against edge cases, so your results stay sharp over time.

Pick one move today, like adding a role, trimming fluff, or scoring outputs. Save the winner as a template, then reuse it across your projects. Keep your library tidy, tag versions, and review weekly. You get faster, cleaner work, and fewer surprises.

Use this quick recap to guide your next runs:

| User Type | High-Impact Tip |
|---|---|
| Content creators | Chain prompts, then lock tone with two strong examples. |
| AI beginners | Give a clear role, set format, and keep inputs short. |
| Tech people | Add schemas, test edge cases, and log token costs. |
| Business pros | Use short briefs, define success criteria, and A/B weekly. |
| Prompt engineers | Version prompts, build test suites, and score clarity and accuracy. |

Try one technique now, then share your wins in the comments. Keep iterating, keep scores, and retire what fails. You are ready to level up your AI skills!

FAQ

Q: What are AI prompt packages? A: AI prompt packages are curated collections of ready-made templates, roles, examples, and strategies designed to help users get better outputs from AI tools like ChatGPT. They include structured prompts that can be reused and customized for various tasks like content creation, coding, and business workflows.

Q: How do I improve my AI prompt quality? A: Improve AI prompt quality by: 1) Assigning clear roles, 2) Providing context and structure, 3) Using few-shot examples, 4) Breaking complex tasks into steps (prompt chaining), 5) Testing and refining with A/B comparisons, and 6) Specifying output format clearly.

Q: What is prompt chaining? A: Prompt chaining is a technique where you break large tasks into smaller, sequential steps. Each prompt’s output becomes the input for the next prompt. This reduces errors, maintains consistency, and produces higher-quality results for complex projects.

Q: What’s the difference between few-shot and zero-shot prompting? A: Zero-shot prompting gives instructions without examples—fast but less controlled. Few-shot prompting includes 2-3 examples of desired outputs, which helps AI understand tone, format, and style better. Use zero-shot for quick tasks, few-shot when consistency matters.

Q: How often should I test and update my prompts? A: Test prompts regularly, especially when AI models update. Best practice: Keep 3 variants per task, run A/B tests weekly, version your prompts with dates, and retire what doesn’t perform. Review your prompt library monthly.