Imagine seeing a video of your favorite politician saying something outrageous. What if that video wasn’t real? This isn’t some far-off future; it’s happening now. Artificial intelligence has become a powerful tool in shaping public opinion, and it’s being used in ways that threaten democracy itself.

Recent examples, like a fake video of a presidential candidate created with generative AI ahead of the 2024 election, show how dangerous this can be. Experts like Thomas Scanlon and Randall Trzeciak warn that deepfakes and AI-generated misinformation could sway election outcomes and erode trust in the political process.

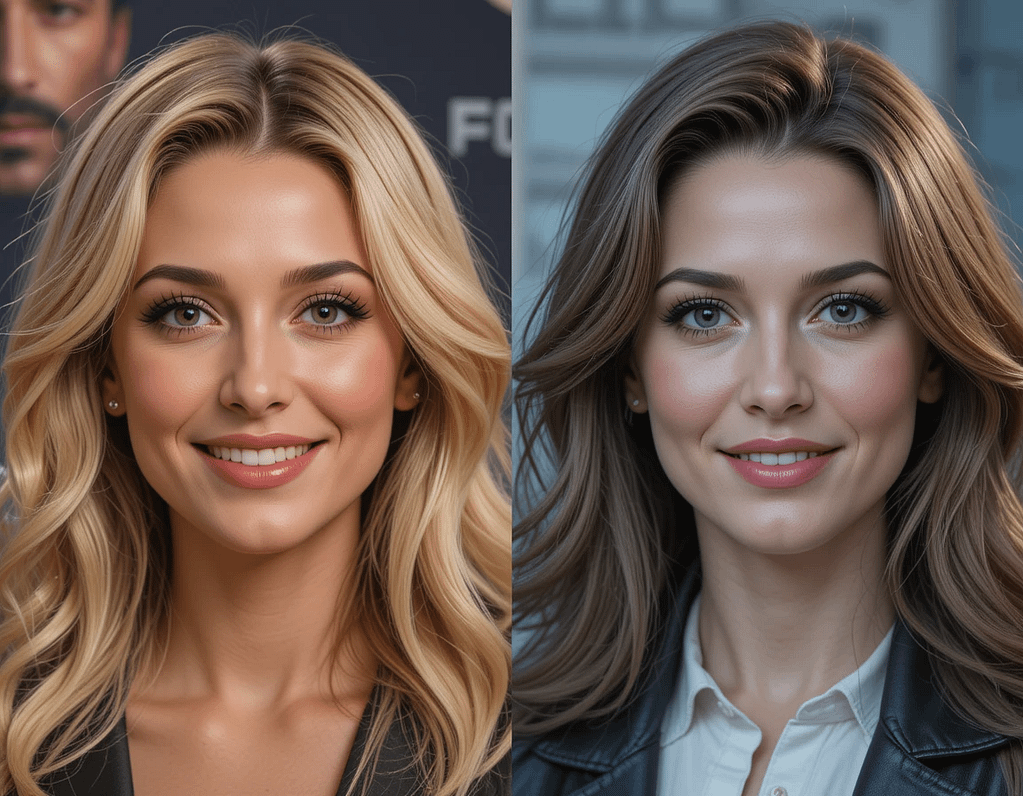

These manipulated videos, known as deepfakes, can be realistic enough to fool attentive viewers. They make it possible to spread false narratives in which political opponents appear to say or do things they never did. This kind of misinformation can have serious consequences, influencing voters’ decisions and undermining the integrity of elections.

As we approach the next election cycle, it’s crucial to stay vigilant. The line between fact and fiction is blurring, and the stakes have never been higher. By understanding how these technologies work and being cautious about the information we consume, we can protect the heart of our democracy.

Stay informed, verify sources, and together, we can safeguard our democratic processes from the growing threat of AI-driven manipulation.

Overview of AI in Political Campaigns

Modern political campaigns have embraced technology like never before. AI tools are now central to how candidates engage with voters and shape their messages. From crafting tailored content to analyzing voter behavior, these systems have revolutionized the political landscape.

The Emergence of AI in Politics

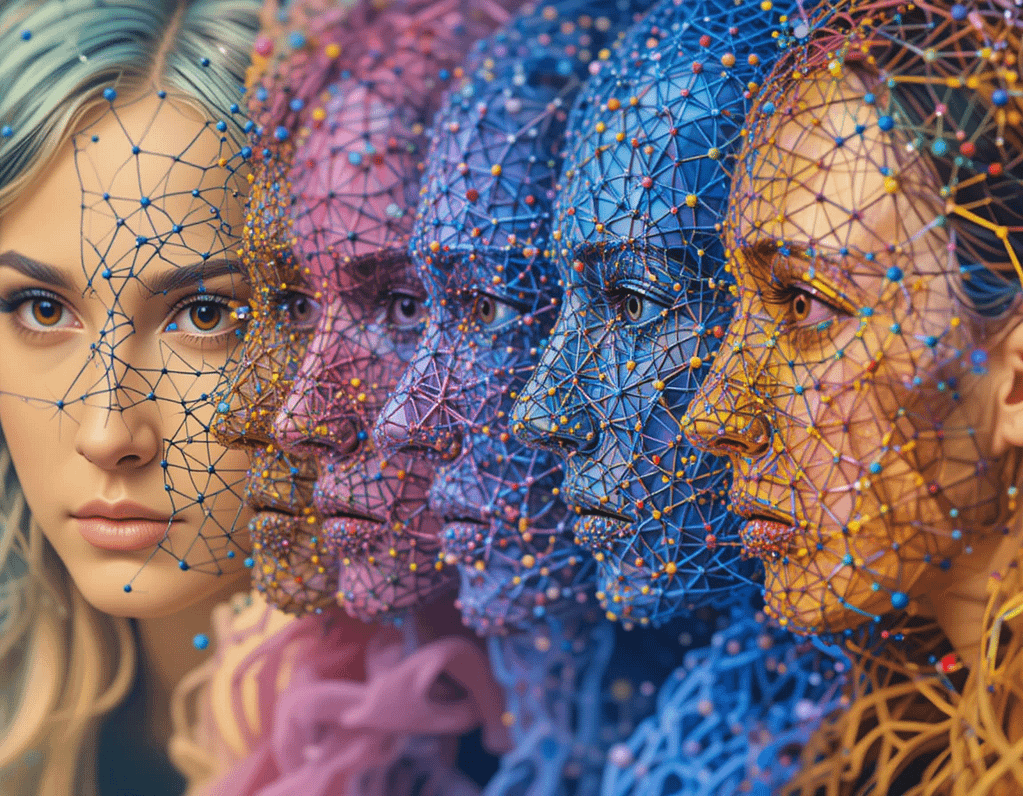

What started as basic photo-editing tools has evolved into sophisticated generative AI. Today, social media platforms and generative systems enable rapid creation and distribution of politically charged content. For instance, ChatGPT can draft speeches, while deepfake technology creates realistic videos, blurring the line between reality and fiction.

Understanding Generative AI Tools

Generative AI uses complex algorithms to produce realistic media. These tools can create convincing videos or audio clips, making it hard to distinguish fact from fiction. Institutions like Heinz College highlight how such technologies can be misused on social media, spreading misinformation quickly.

The transition from traditional image manipulation to automated, algorithm-driven content creation marks a significant shift. This evolution raises concerns about the integrity of political discourse and the potential for manipulation.

Politicians Are Using AI Against You – Here’s the Proof!

Imagine a world where a video of your favorite politician saying something shocking isn’t real. This isn’t science fiction—it’s our reality now. Deepfakes, powered by AI-generated content, are reshaping political landscapes by spreading false information at an alarming rate.

A recent example is a fabricated video of a presidential candidate created with generative AI ahead of the 2024 election. This deepfake aimed to mislead voters by presenting the candidate in a false light. Similarly, manipulated speeches using generative AI systems have further blurred the lines between reality and fiction.

| Aspect | Details |
|---|---|
| Definition | Deepfakes are AI-generated videos that manipulate audio or video content. |
| Example | Fabricated video of a presidential candidate. |
| Impact | Spreads false information, influencing voter decisions. |
| Creation | Uses complex algorithms to produce realistic media. |

These technologies allow for rapid creation and sharing of deceptive content, making it harder to distinguish fact from fiction. As we approach the next election, it’s crucial to recognize and verify AI-generated content to protect our democracy.

The Rise of AI-Powered Propaganda

AI-powered propaganda is reshaping how political messages are spread. By leveraging advanced algorithms, political campaigns can craft tailored narratives that reach specific audiences with precision. This shift has made it easier to disseminate information quickly and broadly.

Deepfakes and Synthetic Media

Deepfakes are a prime example of synthetic media. They manipulate images and audio to create convincing but false content. For instance, a deepfake might show a public figure making statements they never actually made. These creations are so realistic that they can easily deceive even the most discerning viewers.

Effects on Public Opinion and Trust

The impact of deepfakes and synthetic media on public trust is significant. When false information spreads, it can erode confidence in institutions and leaders. Recent incidents have shown how manipulated media can sway public opinion, leading to confusion and mistrust in the political process.

Coordinated groups can amplify these effects, using deepfakes to spread disinformation on a large scale. This poses a significant risk to the integrity of elections and democratic systems. As these technologies evolve, the challenge of identifying and countering false information becomes increasingly complex.

Identifying AI-Generated Content

As technology advances, distinguishing between real and AI-generated content is becoming increasingly challenging. However, with the right knowledge, you can protect yourself from misinformation.

Recognizing Deepfake Indicators

Experts highlight several red flags that may indicate a deepfake:

| Indicator | Details |
|---|---|
| Jump Cuts | Sudden, unnatural transitions in the video. |
| Lighting Inconsistencies | Lighting that doesn’t match the surroundings. |
| Mismatched Reactions | Facial expressions that don’t align with the audio. |
| Unnatural Movements | Stiff or robotic body language. |
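
For readers who like a concrete checklist, the four indicators above can be written down as a small Python sketch. This is only an illustration of a manual review aid, not a deepfake detector: the class name, the function names, and the threshold of two flags are all assumptions made for this example, and a human still has to watch the video and decide whether each flag applies.

```python
from dataclasses import dataclass


@dataclass
class VideoObservations:
    """Hypothetical checklist; each field mirrors one indicator in the table."""
    jump_cuts: bool = False
    lighting_inconsistencies: bool = False
    mismatched_reactions: bool = False
    unnatural_movements: bool = False


def red_flags(obs: VideoObservations) -> list[str]:
    """Return the names of the indicators the reviewer marked as present."""
    return [name for name, present in vars(obs).items() if present]


def needs_closer_look(obs: VideoObservations, threshold: int = 2) -> bool:
    """True when at least `threshold` indicators are present.

    The default threshold is an arbitrary assumption, not an expert-backed rule.
    """
    return len(red_flags(obs)) >= threshold


if __name__ == "__main__":
    notes = VideoObservations(jump_cuts=True, mismatched_reactions=True)
    print(red_flags(notes))
    print(needs_closer_look(notes))
```

A result of `True` here means only that the clip deserves verification before it is shared; a clip with no flags can still be fake.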

Best Practices for Verification

To verify the authenticity of political media, follow these steps:

- Check the source by looking for trusted watermarks or official channels.

- Use fact-checking websites to verify the content’s legitimacy.

- Examine user comments for others’ observations about the media.
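
The first step above, checking whether a link comes from an official channel, can be sketched in a few lines of Python. The domain names below are placeholders invented for this example, and the helper `is_official_source` is hypothetical; in practice you would maintain your own list of channels you trust.

```python
from urllib.parse import urlparse

# Placeholder domains for illustration only; replace with channels you trust.
OFFICIAL_DOMAINS = {"example-campaign.org", "example-news.com"}


def is_official_source(url: str, official_domains=OFFICIAL_DOMAINS) -> bool:
    """Return True if the URL's host is a listed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(
        host == domain or host.endswith("." + domain)
        for domain in official_domains
    )


if __name__ == "__main__":
    print(is_official_source("https://www.example-campaign.org/video/1"))
    print(is_official_source("https://example-campaign.org.evil.net/video/1"))
```

Comparing the whole hostname, rather than searching for the trusted name anywhere in the link, avoids being fooled by lookalike addresses that merely contain an official domain as a prefix.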

Stay vigilant, especially during voting periods, and report suspicious content to help curb misinformation.

Legislative and Regulatory Responses

Governments are taking action to address the misuse of AI in politics. States and federal agencies are introducing new laws and regulations to protect voters and ensure fair campaigns.

State-Level Laws and Initiatives

Several states have introduced legislation to combat AI-driven misinformation. For example, Pennsylvania proposed a bill requiring AI-generated political content to be clearly labeled. The bill aims to prevent voters from being misled by deepfakes or synthetic media.

California has taken a different approach, focusing on transparency in political advertising. A new law mandates that any campaign using AI to generate content must disclose its use publicly. These state-level efforts show a growing commitment to protecting democratic processes.

Challenges in Federal Regulation

While states are making progress, federal regulation faces significant hurdles. The rapid evolution of AI technology makes it difficult for laws to keep up. Experts warn that overly broad regulations could stifle innovation while failing to address the root issues.

“The federal government must balance innovation with regulation,” says Dr. Emily Carter, a legal expert on technology. “It’s a complex issue that requires careful consideration to avoid unintended consequences.”

Despite these challenges, there is a pressing need for federal action. Without a coordinated effort, the risks posed by AI in politics will continue to grow. By learning from state initiatives and engaging in bipartisan discussions, lawmakers can create effective solutions that protect voters while promoting innovation.

How AI is Shaping Election Strategies

Modern political campaigns are increasingly turning to AI to refine their strategies and connect with voters more effectively. This shift marks a new era in how elections are won and lost.

Innovative Campaign Tactics

AI tools are being used to craft hyper-personalized messages, allowing campaigns to target specific voter groups with precision. For instance, AI analyzes voter data to create tailored ads that resonate deeply with individual preferences. This approach has proven effective in driving engagement and support.

Risks of Tailor-Made Misinformation

While AI offers innovative strategies, it also poses significant risks. The ability to create customized messages can be exploited to spread misinformation. On election day, false narratives tailored to specific demographics can influence voter decisions, undermining the electoral process.

As we move through the election year, the real-time adjustment of campaign messages using AI becomes more prevalent. This dynamic approach allows campaigns to respond swiftly to trends and issues, enhancing their agility in a fast-paced political environment.

Social Media Platforms and AI Misinformation

Social media platforms have become central to how information spreads. However, they also face challenges in controlling AI-generated misinformation. Major companies are now taking steps to address this issue.

Platform Policies and Digital Accountability

Companies like Meta, X, TikTok, and Google are introducing policies to tackle AI-driven misinformation. Meta uses digital credentials to label AI-generated content, helping users identify manipulated media. X has implemented a system to flag deepfakes, reducing their spread. TikTok employs content labeling to alert users about synthetic media, while Google focuses on removing election-related misinformation through advanced detection tools.

| Company | Initiative |
|---|---|
| Meta | Digital credentials for AI content |
| X | Flagging deepfakes |
| TikTok | Content labeling |
| Google | Advanced detection tools |

User Responsibilities in the Age of AI

Users play a crucial role in managing AI misinformation. They should verify information through trusted sources and fact-checking websites. Examining user comments can also provide insights. Being cautious and responsible when sharing content helps prevent the spread of false information.

- Check sources for trusted watermarks or official channels.

- Use fact-checking websites to verify content legitimacy.

- Look at user comments for others’ observations.

Conclusion

As we’ve explored, the misuse of advanced algorithms in politics poses a significant threat to global democracy. Deepfakes and manipulated media, created by sophisticated systems, can spread false information quickly, influencing elections around the world. Every person has a responsibility to verify the content they consume online, ensuring they’re not misled by deceptive material.

The challenges posed by these technologies are not limited to one country. From the United States to nations around the world, the impact of AI-driven misinformation is evident. It’s crucial for policymakers, tech companies, and individuals to collaborate, restoring trust in our information ecosystem. By staying informed and proactive, we can address these challenges head-on.

Take the time to educate yourself about AI’s role in politics. Together, we can create a more transparent and accountable digital landscape, safeguarding the integrity of elections worldwide.