Black box AI systems make billions of decisions daily, yet scientists cannot fully explain how these systems arrive at their conclusions. While artificial intelligence continues to achieve breakthrough results in everything from medical diagnosis to autonomous driving, the underlying logic remains surprisingly opaque. Despite their impressive capabilities, modern neural networks operate like sealed machines – data goes in, decisions come out, but the internal reasoning process stays hidden from view.

Today’s AI transparency challenges extend far beyond simple curiosity about how these systems work. Understanding the decision-making process of AI has become crucial for ensuring safety, maintaining accountability, and building trust in automated systems. This article explores the complex architecture behind black box AI, examines current interpretability challenges, and reviews emerging technical solutions that aim to shed light on AI reasoning. We’ll also analyze the limitations of existing methods and discuss why cracking the black box problem remains one of artificial intelligence’s most pressing challenges.

Understanding Black Box AI Architecture

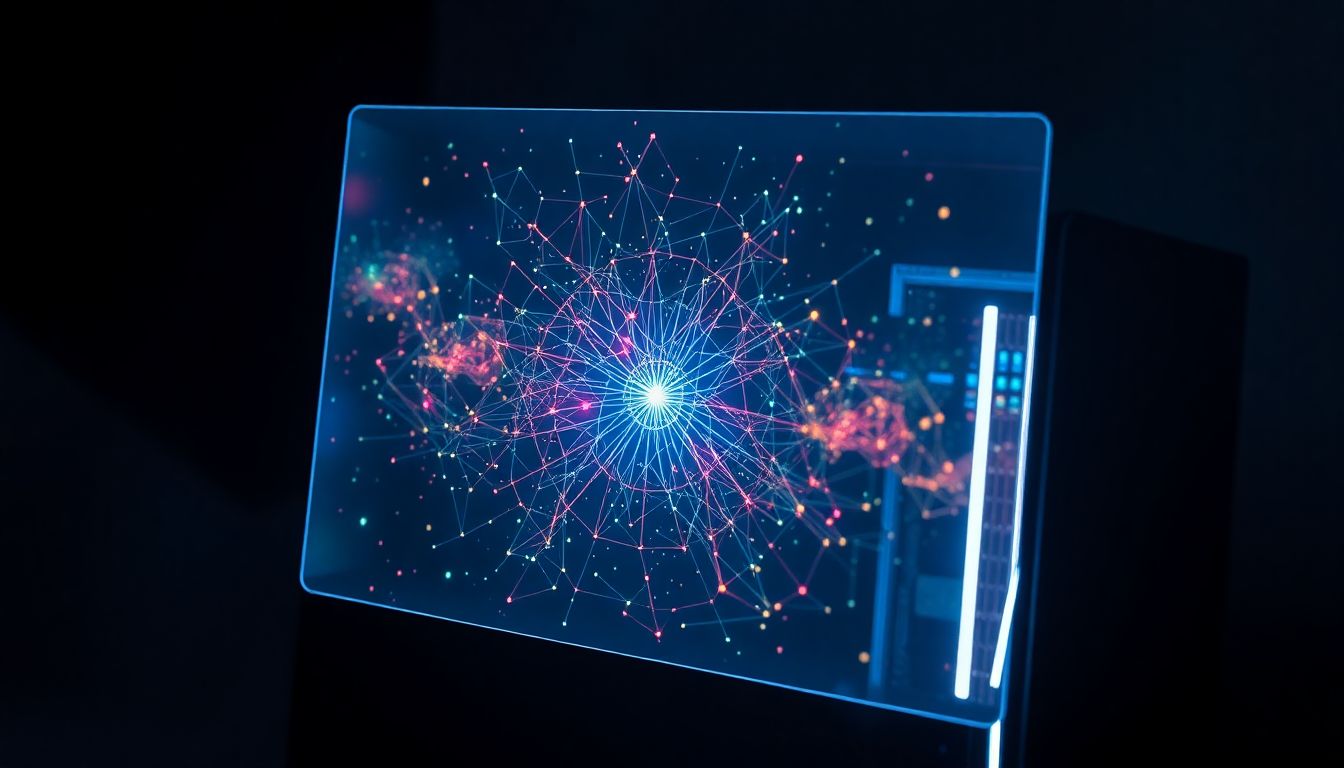

Modern black box AI systems rely on neural networks that process information through multiple interconnected layers. These networks can contain thousands to millions of artificial neurons working together to identify patterns and make decisions, an approach fundamentally different from traditional programming.

Neural Network Structure Basics

Neural networks are loosely inspired by the human brain: they are built from layers of interconnected nodes called artificial neurons [1]. Each network has three primary components: an input layer that receives data, hidden layers that process information, and an output layer that produces results. The hidden layers transform the input by computing weighted sums and passing the results through activation functions [2].

The strength of each connection between neurons is set by a numerical weight (sometimes called a synaptic weight, by analogy with biology), and these weights determine how information flows through the network. The weights are adjusted during training to improve the network’s accuracy [2]. Each neuron also has a bias term that shifts its output, adding another set of learned values to the model’s decision-making process.
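To make these pieces concrete, here is a minimal sketch of one forward pass through a tiny network with two inputs, two hidden neurons, and one output. The weights, biases, and the choice of ReLU as the activation function are illustrative assumptions; in a real network these values would be learned during training rather than written by hand.

```python
import numpy as np

def relu(z):
    # Activation function: keeps positive values, sets negatives to zero
    return np.maximum(0.0, z)

def forward(x, W1, b1, W2, b2):
    """Input layer -> one hidden layer -> output layer."""
    hidden = relu(W1 @ x + b1)  # weighted sum plus bias, then activation
    output = W2 @ hidden + b2   # output layer (no activation here)
    return hidden, output

# Illustrative, hand-picked parameters (a trained network would learn these)
x = np.array([1.0, 2.0])                   # input layer: 2 features
W1 = np.array([[0.5, -1.0], [1.0, 0.25]])  # 2 hidden neurons x 2 inputs
b1 = np.array([0.0, -0.5])                 # one bias per hidden neuron
W2 = np.array([[2.0, -1.0]])               # 1 output x 2 hidden neurons
b2 = np.array([0.1])

hidden, output = forward(x, W1, b1, W2, b2)
# hidden == [0, 1]; output is approximately -0.9
```

With these values, the first hidden neuron’s weighted sum is negative, so ReLU zeroes it out and only the second neuron influences the output. In a network with millions of neurons, tracing which ones fired, and why, is what becomes hard.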

Deep Learning vs Traditional Programming

Deep learning represents a significant departure from conventional programming methods. Traditional programs rely on explicit rules and deterministic outcomes, where developers must code specific instructions for each scenario [3]. In contrast, deep learning models learn patterns directly from data, enabling them to handle complex problems without explicit programming for every possibility.

The key distinction lies in their approach to problem-solving. Traditional programming produces fixed solutions that require manual updates, whereas machine learning models can be retrained on new data to improve their performance [4]. This adaptability makes deep learning particularly effective for tasks involving pattern recognition, natural language processing, and complex decision-making.
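The contrast can be illustrated with a deliberately tiny example that is not deep learning itself: a hand-written rule next to a threshold chosen automatically from labeled examples. The spam scenario, the link-count feature, and the data are hypothetical.

```python
def rule_based_is_spam(num_links):
    # Traditional programming: a developer writes the rule explicitly
    return num_links > 5

def learn_threshold(examples):
    """Pick the threshold that misclassifies the fewest labeled examples."""
    candidates = sorted({value for value, _ in examples})

    def errors(t):
        return sum((value > t) != label for value, label in examples)

    return min(candidates, key=errors)

# Hypothetical labeled data: (number of links, is_spam)
data = [(0, False), (1, False), (2, False), (3, True), (4, True), (8, True)]
threshold = learn_threshold(data)

def learned_is_spam(num_links):
    # Machine learning: the decision boundary comes from the data
    return num_links > threshold
```

Here the learned threshold is 2, so a message with 3 links is flagged by the learned rule but not by the hand-written one. Retraining on different examples would move the threshold without anyone editing the code.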

Key Components of Modern AI Systems

Modern AI systems integrate several essential components that work together to enable sophisticated decision-making capabilities:

Data Processing Units: These handle the initial input and transform raw data into a format suitable for analysis [5].

Learning Algorithms: The system employs various learning approaches, including:

Supervised learning with labeled data

Unsupervised learning for pattern discovery

Reinforcement learning through environmental feedback [5]

The system’s problem-solving capabilities stem from specialized techniques like planning, search, and optimization algorithms [5]. Additionally, modern AI incorporates natural language processing and computer vision components, enabling it to understand human language and interpret visual information effectively.

Each layer in a deep neural network contains multiple neurons that process increasingly complex features of the input data [6]. Through these layers, the network can analyze raw, unstructured data sets with minimal human intervention, leading to advanced capabilities in language processing and content creation [6]. Nevertheless, this architecture creates inherent opacity: developers can record every value computed in the hidden layers, but those vast arrays of numbers do not map onto human-readable reasons, so the processing within hidden layers remains largely inscrutable [6].

Current Interpretability Challenges

Interpreting the decision-making process of artificial intelligence systems presents significant technical hurdles that researchers continue to address. These challenges stem from the inherent complexity of modern AI architectures and their data-driven nature.

Model Parameter Complexity

The sheer scale of parameters in contemporary AI models creates fundamental barriers to understanding their operations. Modern language models contain billions or even trillions of parameters [7], far too many for humans to follow how these variables interact. For a single layer with just 10 parameters, there are 10! = 3,628,800 possible ways of permuting the weights [8], highlighting the astronomical complexity at play.
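The factorial count also reflects a concrete symmetry in neural networks: reordering a layer’s hidden neurons, together with their incoming and outgoing weights, leaves the network’s output unchanged. The sketch below checks this on a randomly initialized toy network with 10 hidden neurons; the sizes, tanh activation, and random weights are illustrative assumptions.

```python
import math
import numpy as np

rng = np.random.default_rng(0)
n_inputs, n_hidden = 3, 10

# Randomly initialized toy network (illustrative only)
W1 = rng.normal(size=(n_hidden, n_inputs))  # hidden x input
b1 = rng.normal(size=n_hidden)
W2 = rng.normal(size=(1, n_hidden))         # output x hidden

def forward(x, W1, b1, W2):
    return W2 @ np.tanh(W1 @ x + b1)

# Reorder hidden neurons: permute rows of W1, entries of b1,
# and the matching columns of W2
perm = rng.permutation(n_hidden)
x = np.array([0.3, -1.2, 0.8])
original = forward(x, W1, b1, W2)
permuted = forward(x, W1[perm], b1[perm], W2[:, perm])

print(np.allclose(original, permuted))  # True: same function, reordered weights
print(math.factorial(n_hidden))         # 3628800 equivalent orderings
```

Because all of these orderings compute the same function, a neuron’s position inside a layer carries no meaning on its own, one small reason why reading raw weights reveals so little.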

Moreover, these parameters function like intricate knobs in a complex machine, loosely connected to the problems they solve [9]. When models grow larger, they become more accurate at reproducing training outputs, yet simultaneously more challenging to interpret [10]. This complexity often leads to overfitting issues, where models memorize specific examples rather than learning underlying patterns [7].
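Overfitting is easy to reproduce at toy scale. In this sketch, a polynomial stands in for a model and its degree for model size (both assumptions for illustration): a degree-9 polynomial has one coefficient per training point, so it can reproduce the noisy training data almost exactly, while a degree-3 fit cannot.

```python
import numpy as np

# Toy data: a sine curve plus deterministic alternating "noise"
x_train = np.linspace(0.0, 1.0, 10)
y_train = np.sin(2 * np.pi * x_train) + 0.3 * (-1.0) ** np.arange(10)

# Held-out points compared against the true, noise-free pattern
x_test = np.linspace(0.0, 1.0, 101)
y_test = np.sin(2 * np.pi * x_test)

def mse(coeffs, x, y):
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

small_model = np.polyfit(x_train, y_train, deg=3)  # 4 coefficients
large_model = np.polyfit(x_train, y_train, deg=9)  # 10 coefficients, one per point

for name, c in [("degree 3", small_model), ("degree 9", large_model)]:
    print(f"{name}: train MSE {mse(c, x_train, y_train):.4f}, "
          f"test MSE {mse(c, x_test, y_test):.4f}")
```

The degree-9 model wins on training error but loses on held-out points, because it has memorized the noise rather than the underlying sine curve.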

Training Data Opacity Issues

The lack of transparency regarding training data poses substantial challenges for AI interpretation. Training datasets frequently lack proper documentation, with license information missing in more than 70% of cases [11]. This opacity creates multiple risks:

Potential exposure of sensitive information

Unintended biases in model behavior

Compliance issues with emerging regulations

Legal and copyright vulnerabilities [11]

Furthermore, when models are trained continuously or updated with new data, explanations need constant revision to remain relevant [10]. Systems that learn from the outcomes of their own decisions and incorporate new data can become increasingly opaque over time [10].

Processing Layer Visibility Problems

The internal representation of non-symbolic AI systems contains complex non-linear correlations rather than human-readable rules [10]. This opacity stems from several factors:

First, deep neural networks process information through multiple hidden layers, making it difficult to trace how initial inputs transform into final outputs [12]. The intricate interactions within these massive neural networks create unexpected behaviors not explicitly programmed by developers [13].

Second, these systems rest on what researchers call “ghost work”: largely invisible human labor, such as data labeling and content moderation, that shapes how AI systems behave but rarely appears in accounts of how they work [14]. This invisibility extends beyond technical aspects, as AI systems frequently make decisions based on factors that humans cannot directly observe or comprehend [15].

Notably, too much information can impair human decision-making [15]. Explanations must therefore account for human cognitive limits, including when and how much information to present to decision-makers [15]. This balance between complexity and comprehensibility remains a central challenge in developing interpretable AI systems.

Research Breakthroughs in AI Transparency

Recent advances in AI research have unlocked promising methods for understanding the inner workings of neural networks. Scientists are steadily making progress in decoding the decision-making processes within these complex systems.

Anthropic’s Feature Detection Method

Anthropic researchers have pioneered an approach to decoding large language models based on dictionary learning. The method treats artificial neurons like letters in Western alphabets, which gain meaning only in specific combinations [16]. By analyzing these neural combinations, researchers identified millions of features within Claude’s neural network, creating a detailed, though still partial, map of the model’s knowledge representation [16].
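Anthropic’s published work implements dictionary learning with sparse autoencoders, which rewrite each activation vector as a sparse, non-negative combination of many learned feature directions. The sketch below shows a generic forward pass and training objective for such an autoencoder. The dimensions, random initialization, and L1 coefficient are assumptions, the weights are untrained, and Anthropic’s actual architecture and loss differ in details.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_features = 8, 32  # more features than neurons (overcomplete)

# Hypothetical, untrained parameters; a real dictionary is learned
W_enc = rng.normal(scale=0.1, size=(n_features, d_model))
b_enc = np.zeros(n_features)
W_dec = rng.normal(scale=0.1, size=(d_model, n_features))
b_dec = np.zeros(d_model)

def sae_forward(x):
    # Encoder: non-negative feature activations
    f = np.maximum(0.0, W_enc @ (x - b_dec) + b_enc)
    # Decoder: rebuild the activation as a weighted sum of feature directions
    x_hat = W_dec @ f + b_dec
    return f, x_hat

def sae_loss(x, l1_coeff=1e-3):
    f, x_hat = sae_forward(x)
    reconstruction = np.sum((x - x_hat) ** 2)
    sparsity = l1_coeff * np.sum(np.abs(f))  # pushes most features toward zero
    return reconstruction + sparsity

x = rng.normal(size=d_model)  # stand-in for one activation vector
features, x_hat = sae_forward(x)
```

Training would adjust the encoder and decoder so that reconstruction error stays low while only a few feature activations are non-zero for any given input; each decoder column then serves as one candidate feature direction.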

The team extracted activity patterns that correspond to both concrete and abstract concepts. These patterns, known as features, span many domains, from physical objects to complex ideas [1]. Most notably, the researchers discovered features related to safety-critical aspects of AI behavior, such as deceptive practices and potentially harmful content generation [16].

By manipulating these identified features, scientists demonstrated a new degree of control over the model’s behavior. Raising or lowering the activity of specific features let them amplify or suppress particular aspects of the AI’s responses [1]. For instance, researchers could influence the model’s tendency to generate safer computer programs or reduce inherent biases [16].
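One simple way to express feature steering is to add a scaled copy of a feature’s direction to a model’s internal activation vector: a positive strength amplifies the feature and a negative one suppresses it. This is a sketch of that general idea, not necessarily the exact procedure Anthropic used; the activation and feature vectors are hypothetical.

```python
import numpy as np

def steer(activation, feature_direction, strength):
    """Shift an activation vector along a (normalized) feature direction."""
    unit = feature_direction / np.linalg.norm(feature_direction)
    return activation + strength * unit

activation = np.array([0.2, -0.1, 0.4, 0.0])  # hypothetical activation vector
feature = np.array([0.0, 3.0, 0.0, 4.0])      # hypothetical feature direction

amplified = steer(activation, feature, strength=5.0)    # boost the feature
suppressed = steer(activation, feature, strength=-5.0)  # dampen the feature
```

Because the direction is normalized, the activation’s component along the feature moves by exactly the chosen strength, while components orthogonal to it stay fixed.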

Neural Network Visualization Tools

Significant progress has been made in developing tools that make neural networks more transparent. These visualization techniques provide crucial insights into how AI systems process and analyze information:

TensorBoard displays training metrics, model graphs, and histograms of weights and activations as training progresses, letting researchers watch how a model changes over time [17]

DeepLIFT compares each neuron’s activation with its activation on a reference input and propagates these differences back through the network, assigning each input feature a contribution score that traces how it influenced the output [18]
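A minimal sketch of DeepLIFT’s Rescale rule for a one-hidden-layer ReLU network: each ReLU gets a multiplier equal to its change in output divided by its change in input relative to the reference, and these multipliers are chained back to the inputs. The network weights, the input, and the all-zeros reference are illustrative assumptions; the full method covers more layer types and rules.

```python
import numpy as np

# Toy network (illustrative weights): 2 inputs -> 2 ReLU hidden units -> 1 output
W1 = np.array([[1.0, -2.0], [0.5, 1.5]])  # hidden x input
b1 = np.array([0.0, -1.0])
W2 = np.array([2.0, -1.0])                # output weights

def forward(x):
    z = W1 @ x + b1         # pre-activation of hidden units
    h = np.maximum(0.0, z)  # ReLU
    return z, h, W2 @ h

def deeplift_rescale(x, x_ref):
    z, h, y = forward(x)
    z_ref, h_ref, y_ref = forward(x_ref)
    dz, dh = z - z_ref, h - h_ref
    # Rescale rule: multiplier = change in ReLU output / change in ReLU input.
    # Where the input did not change, fall back to the ReLU gradient.
    safe_dz = np.where(dz == 0.0, 1.0, dz)
    m = np.where(dz == 0.0, (z > 0).astype(float), dh / safe_dz)
    # Chain multipliers back to the inputs and scale by each input's change
    contributions = ((W2 * m) @ W1) * (x - x_ref)
    return contributions, y - y_ref

x = np.array([2.0, 0.5])
x_ref = np.zeros(2)  # assumed reference ("baseline") input
contributions, delta_y = deeplift_rescale(x, x_ref)
```

The contributions sum exactly to the difference between the output at the input and at the reference (1.25 here), the “summation-to-delta” property that DeepLIFT is designed to satisfy.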

The development of dynamic visual explanations has proven particularly valuable in critical domains like healthcare. These tools enable medical professionals to understand how AI systems reach diagnostic conclusions, fostering a collaborative environment between human experts and artificial intelligence [19].

Visualization techniques serve multiple essential functions in understanding AI systems:

Training monitoring and issue diagnosis

Model structure analysis

Performance optimization

Educational purposes for students mastering complex concepts [20]

These tools specifically focus on uncovering data flow within models and providing insights into how structurally identical layers learn to focus on different aspects during training [20]. Consequently, data scientists and AI practitioners can obtain crucial insights into model behavior, identify potential issues early in development, and make necessary adjustments to improve performance [20].

The combination of feature detection methods and visualization tools marks a significant step forward in AI transparency. These advances not only help researchers understand how AI systems function at a deeper level but also support more effective governance and regulatory compliance [21]. As these technologies continue to evolve, they promise to make AI systems increasingly interpretable while maintaining their sophisticated capabilities.

Technical Solutions for AI Interpretation

Technological advancements have produced several powerful tools and frameworks that help decode the complex decision-making processes within artificial intelligence systems. These solutions offer practical approaches to understanding previously opaque AI operations.

LIME Framework Implementation

Local Interpretable Model-agnostic Explanations (LIME) is a widely used technique for explaining individual predictions of black box AI models. It perturbs the original data point and observes how the model’s outputs change [3], weights each perturbed sample by its proximity to the original input, and fits a simple surrogate model, typically linear, whose coefficients reveal which features drove that specific decision.

The framework operates through a systematic approach:

Data perturbation and analysis

Weight assignment based on proximity

Surrogate model creation

Individual prediction explanation
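The four steps above can be sketched from scratch for tabular data. This is not the lime package’s API: the Gaussian perturbations, the exponential proximity kernel, its width, and the weighted least-squares surrogate are assumptions chosen to keep the example short, and the black box is a hypothetical stand-in.

```python
import numpy as np

def black_box(X):
    # Hypothetical opaque model: nonlinear in both features
    return np.sin(X[:, 0]) + X[:, 1] ** 2

def lime_explain(predict, x0, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance with small Gaussian noise and query the model
    Z = x0 + rng.normal(scale=scale, size=(n_samples, x0.size))
    y = predict(Z)
    # 2. Weight each sample by its proximity to x0 (exponential kernel)
    dist = np.linalg.norm(Z - x0, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 3. Fit a weighted linear surrogate: y ~ intercept + coef . z
    A = np.column_stack([np.ones(n_samples), Z])
    sw = np.sqrt(w)
    params, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    # 4. The slopes explain this one prediction locally
    return params[1:]

x0 = np.array([0.0, 1.0])
coefs = lime_explain(black_box, x0)
```

Near the instance (0, 1), the black box’s local slopes are cos(0) = 1 for the first feature and 2 for the second, so the surrogate’s coefficients come out close to [1, 2]. For a black box that is already linear, the surrogate recovers its weights exactly.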

LIME works with various types of data, including text, images, and tabular information [22]. It aims for local fidelity, meaning the surrogate should match the model’s behavior in the neighborhood of the instance being explained, although explanations can shift with the choice of perturbations and kernel settings.

Explainable AI Tools

Modern explainable AI tools combine analysis capabilities with user-friendly interfaces. ELI5 (Explain Like I’m 5) and SHAP (SHapley Additive exPlanations) are two widely used frameworks integrated into contemporary machine learning platforms [3]. These tools let data scientists examine model behavior throughout development, helping them assess fairness and robustness before and after deployment.

SHAP builds on Shapley values from cooperative game theory to compute each feature’s contribution to a specific prediction [23]. This approach produces explanations by:

Analyzing feature importance

Calculating contribution values

Providing local accuracy

Maintaining additive attribution
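Shapley values can be computed exactly when there are only a few features, by averaging each feature’s marginal contribution over every coalition of the other features. The sketch below does that by brute force; the convention of replacing “absent” features with a baseline value is one of several used in practice, and neither the function nor the toy model is part of the shap library’s API.

```python
from itertools import combinations
from math import factorial

def shapley_values(f, x, baseline):
    """Exact Shapley values for f at x, treating absent features as baseline values."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their real values; others use the baseline
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Toy model: linear in feature 0, interaction between features 1 and 2
def model(z):
    return 2 * z[0] + z[1] * z[2]

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
```

Feature 0 enters the model linearly and receives its full effect of 2, while the interaction term x1·x2 = 6 is split evenly between features 1 and 2. The attributions add up to f(x) - f(baseline) = 8, the local accuracy and additivity properties listed above. Enumeration cost grows exponentially with the number of features, which is why practical SHAP tools rely on approximations or model-specific algorithms.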

Model Debugging Approaches

Effective model debugging requires a multi-faceted strategy to identify and resolve performance issues. Cross-validation splits the data into several subsets (folds), training on all but one and evaluating on the held-out fold in turn, which shows how consistently the model performs across different portions of the data [4]. Learning curves show how performance changes as the training set grows, while validation curves show how it changes as a single hyperparameter varies.
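A minimal k-fold cross-validation loop, written without any library so each step is visible. The contiguous folds, squared-error metric, and trivial predict-the-mean model are assumptions for illustration; real data should usually be shuffled before splitting, and k must not exceed the number of examples.

```python
def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def cross_validate(xs, ys, fit, predict, k=5):
    """Return the mean squared error on each held-out fold."""
    scores = []
    for test_idx in k_fold_indices(len(xs), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in test_idx]
        scores.append(sum(errors) / len(errors))
    return scores

# Baseline model for illustration: always predict the training mean
def fit_mean(xs, ys):
    return sum(ys) / len(ys)

def predict_mean(model, x):
    return model

xs = list(range(10))
ys = [2 * v for v in xs]
scores = cross_validate(xs, ys, fit_mean, predict_mean, k=5)
```

Large differences between fold scores signal that performance depends heavily on which data the model happened to see, which is often the first clue when debugging.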

Feature selection and engineering play crucial roles in model optimization. These processes involve:

Identifying relevant features

Transforming existing attributes

Creating new informative variables

Addressing data imbalance issues [4]

Model assertions, runtime checks on a model’s outputs, flag likely errors as predictions are made and can supply signals for improving the model, while anomaly detection mechanisms identify unusual behavior patterns [24]. Visualization techniques prove invaluable for debugging, allowing developers to observe input and output values during execution. These tools help pinpoint error sources and track how data is modified throughout the debugging process [24].
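Two lightweight checks of this kind can be sketched as follows: a model assertion that flags probability outputs which are not a valid distribution, and a z-score rule that flags values far from the rest of a batch. The function names, the tolerance, the three-standard-deviation threshold, and the latency data are hypothetical choices for illustration.

```python
import statistics

def assert_valid_probabilities(probs, tol=1e-6):
    """Model assertion: return a list of problems with a probability output."""
    problems = []
    if any(p < 0.0 or p > 1.0 for p in probs):
        problems.append("probability outside [0, 1]")
    if abs(sum(probs) - 1.0) > tol:
        problems.append("probabilities do not sum to 1")
    return problems

def zscore_anomalies(values, threshold=3.0):
    """Return indices of values more than `threshold` std devs from the mean."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0.0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]

latencies_ms = [10.0] * 20 + [100.0]  # hypothetical per-request latencies
flagged = zscore_anomalies(latencies_ms)  # the last request stands out
```

Neither check explains why a model misbehaves, but both narrow down where to look.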

Modular debugging approaches break AI systems into smaller components, such as data preprocessing and feature extraction units [25]. This systematic method ensures thorough evaluation of each system component, leading to more reliable and accurate models. Through careful implementation of these technical solutions, developers can create more transparent and trustworthy AI systems that maintain high performance standards.

Limitations of Current Methods

Current methods for understanding black box AI face substantial barriers that limit their practical application. These constraints shape how effectively we can interpret and scale artificial intelligence systems.

Computational Resource Constraints

The computational demands of modern AI systems present formidable challenges. Training large-scale models requires immense processing power, often consuming electricity equivalent to that of small cities [26]. The hardware requirements have grown exponentially, with compute needs doubling every six months [26], far outpacing Moore’s Law for chip capacity improvements.
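The gap between these growth rates is easy to quantify. Taking Moore’s Law as a doubling roughly every two years (a common reading, assumed here) and AI compute demand as doubling every six months, as cited above:

```python
def growth_factor(months, doubling_period_months):
    """How many times larger a quantity gets if it doubles every period."""
    return 2 ** (months / doubling_period_months)

ai_compute = growth_factor(24, 6)   # doubling every 6 months
moores_law = growth_factor(24, 24)  # doubling roughly every 2 years (assumed)
```

Over two years, that is a 16-fold increase in compute demand against a roughly 2-fold increase in chip capacity, an eightfold gap.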

Financial implications remain equally daunting. The final training run of GPT-3 alone cost between $500,000 and $4.6 million [5]. GPT-4’s training expenses were even higher, reaching approximately $50 million for the final run, with total costs exceeding $100 million when accounting for trial and error phases [5].

Resource scarcity manifests through:

Limited availability of state-of-the-art chips, primarily Nvidia’s H100 and A100 GPUs [5]

High energy consumption leading to substantial operational costs [27]

Restricted access to specialized computing infrastructure [5]

Scalability Issues with Large Models

As AI models grow in size and complexity, scalability challenges become increasingly pronounced. The Chinchilla paper indicates that, for compute-optimal training, model size and the amount of training data should grow in roughly equal proportion as compute increases [28]. However, the high-quality, human-created content needed for training has largely been consumed, with remaining data becoming increasingly repetitive or unsuitable [28].
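A back-of-the-envelope version of this rule uses two widely quoted approximations: training compute C ≈ 6·N·D FLOPs for N parameters and D training tokens, and a compute-optimal ratio of roughly 20 tokens per parameter. Both are simplifications of the paper’s fitted scaling laws, assumed here for illustration.

```python
import math

TOKENS_PER_PARAM = 20  # rule-of-thumb ratio commonly quoted from the Chinchilla results

def chinchilla_optimal(compute_flops):
    """Split a compute budget C between parameters N and tokens D,
    using the approximations C ~ 6 * N * D and D ~ 20 * N."""
    n_params = math.sqrt(compute_flops / (6 * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens

n, d = chinchilla_optimal(5.88e23)
```

A budget of about 5.9 × 10^23 FLOPs yields roughly 70 billion parameters trained on 1.4 trillion tokens, the configuration of the Chinchilla model itself. Quadrupling the budget only doubles each quantity, so the data requirement keeps climbing with every increase in compute.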

The scalability crisis extends beyond mere size considerations. Training neural network models across thousands of processes presents significant technical hurdles [29]. These challenges stem from:

Bottlenecks in distributed AI workloads

Cross-cloud data transfer latency issues

Complexity in model versioning and dependency control [6]

Most current interpretability methods do not scale well to large systems or real-time applications [30]. Even minor adjustments to learning rates can cause training to diverge [29], making hyperparameter tuning increasingly sensitive at scale. Deploying state-of-the-art neural network models often proves impossible within an application’s limits on latency and power consumption [29].
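Why a small learning-rate change can flip training from converging to diverging is visible even on a one-parameter quadratic loss, used here as a simplified stand-in for a network’s loss surface (an assumption). Gradient descent on f(w) = a·w²/2 multiplies w by (1 - η·a) at each step, so it converges only when the learning rate η stays below 2/a.

```python
def gradient_descent(curvature, learning_rate, w0=1.0, steps=100):
    """Minimize f(w) = curvature * w**2 / 2; the gradient is curvature * w."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * curvature * w  # w is scaled by (1 - lr * curvature)
    return w

stable = gradient_descent(curvature=10.0, learning_rate=0.19)    # shrinks toward 0
unstable = gradient_descent(curvature=10.0, learning_rate=0.21)  # grows without bound
```

With curvature a = 10, the threshold is η = 0.2: a learning rate of 0.19 shrinks w toward zero, while 0.21, only about 10% larger, makes it blow up.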

Essentially, only a small global elite can develop and benefit from large language models due to these resource constraints [31]. Big Tech firms maintain control over large-scale AI models primarily because of their vast computing and data resources, with estimates suggesting monthly operational costs of $3 million for systems like ChatGPT [31].

Conclusion

Understanding black box AI systems remains one of artificial intelligence’s most significant challenges. Despite remarkable advances in AI transparency research, significant hurdles persist in decoding these complex systems’ decision-making processes.

Recent breakthroughs, particularly Anthropic’s feature detection method and advanced visualization tools, offer promising pathways toward AI interpretability. These developments allow researchers to map neural networks’ knowledge representation and track information flow through multiple processing layers. Technical solutions like LIME and SHAP frameworks provide practical approaches for explaining individual AI decisions, though their effectiveness diminishes with larger models.

Resource constraints and scalability issues present substantial barriers to widespread implementation of interpretable AI systems. Computing requirements continue doubling every six months, while high-quality training data becomes increasingly scarce. These limitations restrict advanced AI development to a small group of well-resourced organizations, raising questions about accessibility and democratization of AI technology.

Scientists must balance the drive for more powerful AI systems against the need for transparency and interpretability. As artificial intelligence becomes more integrated into critical decision-making processes, the ability to understand and explain these systems grows increasingly vital for ensuring safety, accountability, and public trust.